Resource assessment exercises: standard error and confidence intervals

| sorry: |

This section is still under construction! This article was last modified on 06/23/2014. If you have comments please use the Discussion page or contribute to the article! |

We have drawn one SRSwoR from \(U\). What if we take another sample?

S1 <- sample(trees10$dbh, 2) mean(S1) ## [1] 21.5 S2 <- sample(trees10$dbh, 2) # once more... mean(S2) ## [1] 20.5 S3 <- sample(trees$dbh, 2) # and again... mean(S3) ## [1] 21.5

Each time we take a sample, the estimated mean differs. How many different SRSwoR can be drawn from \(U\), when \(N=10\) and \(n=2\)? The set of all possible samples is given by

| $\Omega=\binom{N}{n}\quad\text{here}\quad \Omega=\binom{10}{2}=45$ | 1 |

Thus, in theory we can draw 45 different samples from our small population \(U\), and, thus, could estimate 45 different means. Here is a list of all means that we can compute for our small example population (\(n=2\)),

means <- colMeans(combn(x = trees10$dbh, m = 2)) means ## [1] 15.5 13.0 17.5 20.5 14.0 28.0 30.0 19.5 22.5 16.5 21.0 24.0 17.5 31.5 33.5 ## [16] 23.0 26.0 18.5 21.5 15.0 29.0 31.0 20.5 23.5 26.0 19.5 33.5 35.5 25.0 28.0 ## [31] 22.5 36.5 38.5 28.0 31.0 30.0 32.0 21.5 24.5 46.0 35.5 38.5 37.5 40.5 30.0

What the function

What the function combn()does- the function

combn(x, m)generates all combinations ofnelementsx, takenmat a time. The output is anarray. The functioncolMeanscomputes the means of all columns. The functionrowMeans(), in turn, computes the means of columns.

What is the mean of these means?

mean(means) ## [1] 26.5

The population mean, i.e., true mean, is:

mean(trees10$dbh) ## [1] 26.5

The mean of all estimable means and the parametric mean match. We say that the estimator is unbiased. Note that the mean of a single sample is called an estimate. The formula we use to compute this estimate is called an estimator. Also note that an estimate can, by definition, not be biased, it is the estimator that is potentially biased.

When we look at the possible mean estimates we see that they vary over different samples. The variance of the means is, for a given sample size \(n\), defined as

| $\text{var}(\bar{y})=\frac{\sigma^2}{n_{\bar{y} } }$ | 2 |

,

where \(n_{\bar{y}}\) is the number of estimable means (here, \(n_{\bar{y}}=45\)). The square-root of equation ([eeq:varmeans]) gives the parametric standard error,

| $\sqrt{\text{var}(\bar{y})}=\frac{\sigma}{\sqrt{n_{\bar{y} } } }$ | 3 |

.

Both estimators, 2 and 3 are not used in practise, because we do not observe all possible samples but only one. For a single sample, the variability of the mean over all possible samples can be estimated. The standard error of the mean is defined as,

| $s_{\bar{y} }=\frac{s}{\sqrt{n} }$ | 4 |

.

Note the difference between \(s_{\bar{y}}\) and \(s\). The first one estimates the variability of the mean among different samples, whereas the latter estimates the variability of the values \(y_{i\in S}\), that is, the within sample variability.

Frequently, the standard error is reported in relative terms (and percent),

| $\text{rel. }s_{\bar{y} }(\%)=\frac{s_{\bar{y} } }{\bar{y} }\times 100$ | 4 |

.

sqrt(pvar(means)) # empirical standard error ## [1] 7.782 sd(S)/sqrt(2) # estimated standard error of the mean ## [1] 2.5

We can use the estimated standard error of the mean to construct confidence intervals,

| $\mathbb{P}\bigg(\bar{y} - s_{\bar{y} }\times t_{1-\frac{\alpha}{2},n-1} \leq \mu_y \leq \bar{y} + s_{\bar{y} }\times t_{1-\frac{\alpha}{2},n-1}\bigg)=1-\alpha.$ | 5 |

The value for \(\alpha\) is frequently set to 0.05 (but does not need to be!). Here, \(t\) refers to the \(t\)-distribution, and \(n-1\) gives the degrees of freedom. In R:

n <- 2 lower.limit <- mean(S) - sd(S)/sqrt(n) * qt(p = 0.975, df = n - 1) upper.limit <- mean(S) + sd(S)/sqrt(n) * qt(p = 0.975, df = n - 1) lower.limit ## [1] -15.27 upper.limit ## [1] 48.27

What the function

What the function qt()does- The function

qt(p, df)provides the quantiles of a $t$-distribution. Bypthe quantiles are specified. The argumentdfgives the degrees of freedom, e.g., $n-1$.

Our estimated mean is 16.5 \(\pm\) 31.77 cm. The interpretation of a confidence interval is as follows (for \(\alpha=0.05\)): 95% of the confidence intervals we can construct from all possible samples will contain the true population mean, \(\mu_y\).

Our estimated standard error is much smaller than the empirical standard error. It seems like we have drawn a sample in which the values do not differ much. Of course, our population and sample is very small. Estimates based on a sample of size \(n=2\) might not be very reliable. We will, therefore, take a look at the entire “forest”. The population \(U\) now has \(N=30,000\) elements. Our new sample size will be \(n=50\). This might be a somewhat more realistic situation.

N <- nrow(trees) n <- 50 S <- sample(trees$dbh, size=n) S ## [1] 22 8 18 43 21 44 17 25 32 10 11 17 9 10 56 14 14 10 20 8 37 14 55 29 33 ## [26] 17 10 15 29 8 21 9 9 24 21 28 19 58 16 16 15 20 5 9 14 30 11 9 12 27

What the function

What the function nrow()does- The function

nrow()provides the number of rows of adata.frameormatrix. The functionncol()provides the number of columns.nrow()does not work onavector. Uselength()instead. For adata.frameormatrix, the functiondim()provides both, columns and rows.

We calculate a couple of population parameters for the variable dbh:

mean(trees$dbh) ## [1] 21.05 pvar(trees$dbh) ## [1] 165 sqrt(pvar(trees$dbh)) ## [1] 12.84 sqrt(pvar(trees$dbh))/mean(trees$dbh) ## [1] 0.6102

We will not compute the empirical standard error. Why? There are too many samples possible. Even with modern computers this would take very long.

choose(30000, 50) ## [1] 2.266e+159

What the function

What the function choose()does- The function

choose(n, k)returns binomial coefficients, e.g., $\binom{10}{2}=45$, where $n=10$ and $k=2$.

We will look at “only” 10,000 samples. For each sample of size \(n=50\) we estimate the mean (You do not have to understand what the code does).

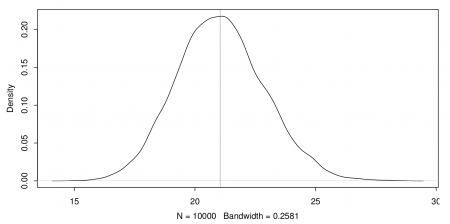

Figure [fig:means] shows the distribution of the 10,000 estimated means.

means <- vector()

for (i in 1:10000) {

means <- append(means, mean(sample(trees$dbh, 50)))

}

Next, we will estimate the population parameters from above using the data from the sample of size \(n=50\). Try to figure out the meaning of the code.

mean(S) ## [1] 20.58 var(S) ## [1] 167.8 sd(S) ## [1] 12.96 sd(S)/mean(S) ## [1] 0.6295 sd(S)/mean(S) * 100 ## [1] 62.95 sd(S0/sqrt(n) ## [1] 1.832 sd(S)/sqrt(n)/mean(S) * 100 ## [1] 8.903 mean(S) - sd(S)/sqrt(n) * qt(0.975, n - 1) ## [1] 16.9 mean(S) + sd(S)/sqrt(n) * qt(0.975, n - 1) ## [1] 24.26