Accuracy assessment


Revision as of 12:37, 2 February 2016


This section is still under construction! This article was last modified on 02/2/2016. If you have comments, please use the Discussion page or contribute to the article!

For decisions based on a map, the map has to be accurate or, at least, its accuracy has to be known.

Accuracy assessment therefore provides information about the quality of a map created from remotely sensed data.

Besides quantifying quality, an accuracy assessment helps to identify possible sources of error and serves as an indicator for comparisons (e.g. between classification algorithms).


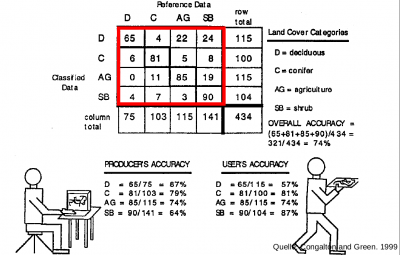

Confusion Matrix

A confusion matrix compares known reference data with the results of the classification, class by class.

Several characteristics can be derived from a confusion matrix:

- Classification errors of omission (exclusion) and commission (inclusion)
  - Number of elements that are wrongly excluded from or included in a certain category

- Overall accuracy
  - Indicates the quality of the map classification as a whole
  - Can be calculated by dividing the total number of correctly classified pixels by the total number of reference pixels

- Producer's accuracy
  - Indicates how well the training set pixels of each category are classified
  - Can be calculated by dividing the number of correctly classified pixels in each category by the number of training set pixels of the corresponding category

- User's accuracy
  - Indicates the probability that a pixel classified into a category actually represents that category in reality
  - Can be calculated by dividing the number of correctly classified pixels in each category by the total number of pixels that were classified into that category
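The measures listed above can be computed directly from a confusion matrix. The following plain-Python sketch makes several assumptions. Rows hold the classified categories and columns hold the reference categories; conventions differ between textbooks and tools, so check which one your software uses. Class labels are the integers 0 to n-1. The function names and the sample labels are hypothetical and only serve as illustration. The omission error of a category is taken as 1 minus its producer's accuracy, and the commission error as 1 minus its user's accuracy.

```python
from typing import List, Sequence

Matrix = List[List[int]]


def _ratio(num: float, den: float) -> float:
    # Avoid division by zero for categories without any pixels.
    return num / den if den else float("nan")


def confusion_matrix(reference: Sequence[int], classified: Sequence[int], n_classes: int) -> Matrix:
    # Assumed layout: matrix[classified category][reference category].
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for ref, cls in zip(reference, classified):
        matrix[cls][ref] += 1
    return matrix


def overall_accuracy(matrix: Matrix) -> float:
    # Correctly classified pixels (diagonal) divided by all reference pixels.
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return _ratio(correct, total)


def producers_accuracy(matrix: Matrix) -> List[float]:
    # Correct pixels of a category divided by its reference total (column sum).
    n = len(matrix)
    return [_ratio(matrix[c][c], sum(matrix[r][c] for r in range(n))) for c in range(n)]


def users_accuracy(matrix: Matrix) -> List[float]:
    # Correct pixels of a category divided by all pixels classified into it (row sum).
    return [_ratio(matrix[r][r], sum(matrix[r])) for r in range(len(matrix))]


# Hypothetical sample: ten reference pixels and their classification result.
reference = [0, 0, 0, 0, 1, 1, 1, 2, 2, 2]
classified = [0, 0, 0, 1, 1, 1, 1, 2, 2, 0]

m = confusion_matrix(reference, classified, 3)
omission = [1 - p for p in producers_accuracy(m)]
commission = [1 - u for u in users_accuracy(m)]

print(m)                                              # [[3, 0, 1], [1, 3, 0], [0, 0, 2]]
print(overall_accuracy(m))                            # 0.8
print([round(p, 2) for p in producers_accuracy(m)])  # [0.75, 1.0, 0.67]
print([round(u, 2) for u in users_accuracy(m)])      # [0.75, 0.75, 1.0]
```

In this example, category 2 has a producer's accuracy of 0.67 but a user's accuracy of 1.0. One of its three reference pixels was omitted, but every pixel labelled 2 is correct.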

It should be noted that the confusion matrix is based on training data. Therefore, this procedure only indicates how well the statistics extracted from these areas can be used to categorize the same areas.

Implementation in QGIS

GRASS

The GRASS Toolbox provides a tool named r.kappa, which assesses the accuracy using two raster files: the classification result and a reference map. In addition to the confusion matrix, it calculates several kappa values.

1. Open Toolbox --> GRASS --> Raster --> r.kappa.

2. Select the classified map as Raster layer containing classification result.

3. Select the training data as Raster layer containing reference classes.

4. Specify where to save the output file in Error matrix and kappa.
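The kappa coefficient measures the agreement between classification and reference beyond the agreement expected by chance. It is defined as kappa = (p_o - p_e) / (1 - p_e). Here p_o is the observed agreement, which equals the overall accuracy, and p_e is the chance agreement estimated from the row and column totals of the error matrix.

The sketch below computes this standard (Cohen's) kappa in plain Python. It is not r.kappa's source code. The function name and the example matrix are hypothetical. r.kappa's reported values may differ, for example in rounding or in how no-data cells are handled. r.kappa also reports per-category values, which this sketch does not cover.

```python
from typing import List


def cohens_kappa(matrix: List[List[int]]) -> float:
    """Overall kappa of a square error matrix (rows: classified, columns: reference)."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    # Observed agreement: share of pixels on the diagonal (the overall accuracy).
    observed = sum(matrix[i][i] for i in range(n)) / total
    # Chance agreement: sum over categories of row total * column total, divided by N^2.
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    if expected == 1:
        # Degenerate case (e.g. a single category): kappa is undefined.
        raise ValueError("chance agreement is 1; kappa is undefined")
    return (observed - expected) / (1 - expected)


# Hypothetical 3-category error matrix.
error_matrix = [
    [3, 0, 1],
    [1, 3, 0],
    [0, 0, 2],
]
print(round(cohens_kappa(error_matrix), 4))  # 0.697
```

In this example, the overall accuracy is 0.8, but kappa is only about 0.70. About a third of the agreement (p_e = 0.34) would be expected by chance alone.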

Orfeo Toolbox

Semi-automatic classification plugin
