Ratio estimator

Revision as of 14:03, 14 April 2021

There are situations in forest inventory sampling in which the value of the target variable is known (or suspected) to be highly correlated with another variable (called co-variable or ancillary variable). We know from the discussion on spatial autocorrelation and the optimization of cluster plot design that correlation between two variables essentially means that one variable already contains a certain amount of information about the other. The higher the correlation, the better the value of the second variable can be predicted when the value of the first is known (and vice versa). Therefore, if such a co-variable is available on the plot, it makes sense to observe it as well and to use its correlation with the target variable to improve the precision of estimating the target variable. This is exactly the situation in which the ratio estimator is applied.

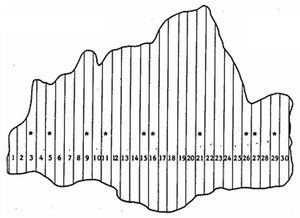

A typical and basic example is that of sample plots of unequal size, as depicted, for example, in Figure 1, where strips of different length constitute the population of samples. Another example would be clusters of unequal size: while cluster sampling only deals with the case of clusters of equal size, the ratio estimator makes it possible to take differently sized sample plots into account. Here, the size of the sample plot is the co-variable that needs to be determined for each sample plot. A high positive correlation is to be expected between most forestry-relevant attributes and plot size: the larger the plot, the more basal area, number of stems, volume etc. is expected to be present.

In fact, the ratio estimator does not introduce a new sampling technique: we use simple random sampling as the sampling design here. The ratio estimator introduces new aspects in two respects:

- plot design: observation of an ancillary variable on each plot in addition to the observation of the target variable.

- estimation design: the ratio estimator integrates the ancillary variable into the estimator.

Side-comment:

- For those who have some knowledge of or interest in the design of experiments and the linear statistical models used there (analysis of variance), it may be interesting to note that experimental design also has a technique in which a co-variable is observed on each experimental plot in order to allow a more precise estimation of treatment effects: this technique is called analysis of co-variance.

The ratio estimator takes its name from the fact that what we actually estimate from the sample plots is a ratio: for example, number of stems per hectare. The ancillary variable (“area” in this case) always appears in the denominator of the ratio. We denote the variable of interest, as usual, by \(y_i\) and the ancillary variable by \(x_i\); more on notation is given in double sampling.

in simple words:

- The ratio estimator involves the ratio of two variates. Imagine there are two characteristics (say x and y) we can observe for each sampled element and we have in addition information about the total of x, then the total of y can be estimated by multiplying the known total of x by a ratio of both variables. This ratio can be estimated from the means of both variables that are observed for the sampled elements.

Notation

- \(R\,\): Parametric ratio, that is, the true ratio present in the population;

- \(\mu_y\,\): Parametric mean of the target variable \(Y\);

- \(\mu_x\,\): Parametric mean of the ancillary variable \(X\);

- \(\tau_x\,\): Parametric total of the ancillary variable \(X\);

- \(\rho\,\): Parametric Pearson coefficient of correlation between \(Y\) and \(X\);

- \(\sigma_y\,\): Parametric standard deviation of the target variable \(Y\);

- \(\sigma_x\,\): Parametric standard deviation of the ancillary variable \(X\);

- \(n\,\): Sample size;

- \(r\,\): Estimated ratio;

- \(y_i\,\): Value of the target variable observed on the \(i^{th}\) sampled element (plot);

- \(x_i\,\): Ancillary variable, e.g. area, for the \(i^{th}\) plot;

- \(\bar y\,\): Estimated mean of the target variable;

- \(\bar x\,\): Estimated mean of the ancillary variable;

- \(\hat{\tau}_y\,\): Estimated total of the target variable;

- \(\hat{\rho}\,\): Estimated coefficient of correlation of \(Y\) and \(X\);

- \({s_y}^2\,\): Estimated variance of the target variable;

- \({s_x}^2\,\): Estimated variance of the ancillary variable.

Regarding the notation for the ratio estimator, note above all that the letters \(r\) and \(R\) are used here for the ratio and not for the correlation coefficient. This sometimes causes confusion. We use the Greek letter \(\rho\) (rho) for the parametric correlation coefficient and the usual “hat” notation \(\hat{\rho}\) for its estimate.

Estimators

What we estimate with the ratio estimator in the first place (as the name already suggests) is the ratio \(R\), that is, target variable per ancillary variable, for example: number of trees per hectare. From this, we can then also estimate the total of the target variable.

The parametric ratio is

\[R=\frac{\mu_y}{\mu_x}\,\]

which is estimated from the sample as

\[r=\frac{\bar{y}}{\bar{x}}=\frac{\sum_{i=1}^n y_i}{\sum_{i=1}^n x_i}.\,\]

It is very important to note here that we calculate a ratio of means and NOT a mean of ratios! The ratio is calculated as the mean of the target variable divided by the mean of the ancillary variable; which is equivalent to calculating the sum of the target variable divided by the sum of the ancillary variable. It would be erroneous to calculate for each sample a ratio between target variable and ancillary variable and compute the mean over all samples! For that situation (the mean of ratios) there are other estimators which are more complex!
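The difference between the two calculations can be illustrated with a minimal sketch. The function names and the three-plot data set are hypothetical and serve only to show that the two quantities generally differ.

```python
def ratio_of_means(y, x):
    """Ratio estimator r = sum(y) / sum(x), which equals ybar / xbar."""
    if len(y) != len(x) or not y:
        raise ValueError("y and x must be non-empty and of equal length")
    return sum(y) / sum(x)


def mean_of_ratios(y, x):
    """Mean of the per-plot ratios y_i / x_i; this is NOT the ratio estimator."""
    if len(y) != len(x) or not y:
        raise ValueError("y and x must be non-empty and of equal length")
    return sum(yi / xi for yi, xi in zip(y, x)) / len(y)


# Hypothetical data: stem counts on three strips of different area (ha).
stems = [10, 40, 90]
area_ha = [0.1, 0.2, 0.3]

r = ratio_of_means(stems, area_ha)   # 140 stems / 0.6 ha
m = mean_of_ratios(stems, area_ha)   # (100 + 200 + 300) / 3
```

In this made-up example the large strip carries more weight in the ratio of means than in the mean of ratios, which is why the two values differ.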

The estimated variance of the estimated ratio \(r\) is

\[\hat{var}(r)=\frac{N-n}{N}\frac{1}{n}\frac{1}{{\mu_x}^2}\frac{\sum_{i=1}^n\left(y_i-rx_i\right)^2}{n-1}.\,\]

The estimated total derives from

\[\hat{\tau}_y=r{\tau}_x\,\]

with an estimated error variance of the total of

\[\hat{var}(\hat{\tau}_y)={\tau_x}^2\hat{var}(r),\,\]

as usual.
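The estimators of the ratio and the total can be sketched as follows. This is a minimal illustration under simple random sampling; the function name, the argument names and the data are hypothetical, and the sketch assumes that \(\tau_x=N\mu_x\), where \(N\) is the number of sampling elements in the population.

```python
def ratio_estimates(y, x, N, mu_x):
    """Return (r, var_r, tau_hat, var_tau) for a simple random sample.

    N    -- population size (assumed known)
    mu_x -- known population mean of the ancillary variable,
            so that tau_x = N * mu_x (assumption of this sketch)
    """
    n = len(y)
    if n != len(x) or n < 2:
        raise ValueError("need at least two paired observations")
    r = sum(y) / sum(x)  # ratio of means
    # Sum of squared deviations of y_i from the line r * x_i.
    ss_res = sum((yi - r * xi) ** 2 for yi, xi in zip(y, x))
    var_r = (N - n) / N * (1 / n) * (1 / mu_x ** 2) * ss_res / (n - 1)
    tau_x = N * mu_x
    tau_hat = r * tau_x
    var_tau = tau_x ** 2 * var_r
    return r, var_r, tau_hat, var_tau


# Hypothetical sample of n = 3 plots from a population of N = 10 plots.
r, var_r, tau_hat, var_tau = ratio_estimates(
    y=[2, 4, 9], x=[1, 2, 3], N=10, mu_x=2.0
)
```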

Given the estimated ratio \(r\) the estimated mean derives from

\[\bar{y}_r=r\mu_x\,\]

We see here that, for the estimation of the mean, we need to know the true mean of the ancillary variable. In cases such as the one in Figure 2 this is easily determined, because the total of the ancillary variable is simply the total area. However, in other situations where, for example, leaf area is taken as ancillary variable to estimate leaf biomass, this is extremely difficult. For such cases there are sampling techniques that start with a first sampling phase in which the ancillary variable is estimated, before the target variable is estimated in a second phase; these techniques are called double sampling or two-phase sampling with the ratio estimator.

The estimated mean carries an estimated variance of the mean of

\[\hat{var}(\bar{y}_r)={\mu_x}^2\hat{var}(r)=\frac{N-n}{N}\frac{1}{n}\left\{{s_y}^2+r^2{s_x}^2-2r\hat{\rho}{s_x}{s_y}\right\}\,\]

This latter estimator of the error variance of the estimated mean illustrates some basic characteristics of the ratio estimator very clearly:

If all values of the ancillary variable are the same (for example, when using plot area as ancillary variable in fixed area plot sampling), then \({s_x}^2\) is zero and the estimator is identical to the error variance of the mean estimator in simple random sampling, where the only source of variability that enters is the estimated variance of the target variable \({s_y}^2\). We also see in the above expression that \(r\hat{\rho}{s_x}{s_y}\) should be large, so that a large term is subtracted from the variance terms in braces; the only way to influence this is an intelligent choice of the ancillary variable, which should be highly positively correlated with the target variable. There is not much we can do about the target variable except for changing the plot type and size. That will change the variance of the population, but because of the expected high correlation with the ancillary variable it will also affect the estimation of \(r\) and \({s_x}^2\); in addition, the variability of the target variable enters the above estimator with both positive sign (through \({s_y}^2\)) and negative sign (through \(s_y\) in the subtracted term). In the end, it is difficult to predict how a change in \({s_y}^2\) affects the performance of the ratio estimator.
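As a short worked step, the second form of the variance estimator follows from the first because \(\bar{y}=r\bar{x}\), so that \(y_i-rx_i=(y_i-\bar{y})-r(x_i-\bar{x})\). The symbol \(s_{xy}\) for the sample covariance is introduced here only for this sketch and is not part of the notation list above:

\[\frac{1}{n-1}\sum_{i=1}^n\left(y_i-rx_i\right)^2=\frac{1}{n-1}\sum_{i=1}^n\left\{(y_i-\bar{y})-r(x_i-\bar{x})\right\}^2={s_y}^2+r^2{s_x}^2-2rs_{xy},\qquad s_{xy}=\hat{\rho}{s_x}{s_y}\,\]

Multiplying \(\hat{var}(r)\) by \({\mu_x}^2\) cancels the factor \(1/{\mu_x}^2\), which leaves the expression in braces.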

Efficiency

In the preceding section, we saw in fairly general terms that a high positive correlation between target and ancillary variable makes the variance smaller than that of the simple random sampling estimator (with zero correlation, the ratio estimator would even be less precise, because the term \(r^2{s_x}^2\) is added without anything being subtracted). By re-arranging the above term, we can formulate more specifically in which situation the ratio estimator is superior to the simple random sampling estimator. As always, the relative efficiency is meaningful only when we deal with parametric values. Based on estimated values, the relative efficiency can be misleading.

For the ratio estimator we can formulate from the above estimator of the error variance of the estimated mean

\[\rho\ge\frac{R\sigma_x}{2\sigma_y}=\frac{cv(x)}{2cv(y)}\,\]

where \(cv()\) denotes the coefficient of variation either for the target variable \(Y\) or the ancillary variable \(X\).

As an interpretation of this inequality: the ratio estimator is superior to the simple random sampling estimator if the correlation between the two variables is at least

\[\frac{R\sigma_x}{2\sigma_y}\,\]

or

\[\frac{cv(x)}{2cv(y)}\,\]
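As a sketch of where this threshold comes from, written with parametric values and assuming \(R>0\), \(\sigma_x>0\) and \(\sigma_y>0\): the ratio estimator has the smaller variance when the term in braces of the variance of \(\bar{y}_r\) does not exceed the simple random sampling term \({\sigma_y}^2\),

\[{\sigma_y}^2+R^2{\sigma_x}^2-2R\rho\sigma_x\sigma_y\le{\sigma_y}^2\;\Longleftrightarrow\;R^2{\sigma_x}^2\le 2R\rho\sigma_x\sigma_y\;\Longleftrightarrow\;\rho\ge\frac{R\sigma_x}{2\sigma_y}\,\]

With \(R=\mu_y/\mu_x\), \(cv(x)=\sigma_x/\mu_x\) and \(cv(y)=\sigma_y/\mu_y\), the right-hand side equals \(cv(x)/(2\,cv(y))\).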

There may be situations in which a variable has been observed that might be used as an ancillary variable, but we are not sure whether or not to use the ratio estimator. One could then estimate the relative efficiency; although the conclusion might be misleading, there are cases with such high positive correlations that application of the ratio estimator is definitely indicated.
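Such a sample-based check might look like the following minimal sketch. The function name and the data are hypothetical, and because estimated values replace the parametric ones, the result is only indicative, as cautioned above.

```python
import math


def efficiency_check(y, x):
    """Return (rho_hat, threshold, rel_var) computed from sample data.

    threshold -- r * s_x / (2 * s_y), the sample analogue of R*sigma_x/(2*sigma_y)
    rel_var   -- estimated variance of the ratio estimator of the mean divided
                 by that of the simple random sampling estimator
    """
    n = len(y)
    if n != len(x) or n < 2:
        raise ValueError("need at least two paired observations")
    ybar, xbar = sum(y) / n, sum(x) / n
    s_y2 = sum((yi - ybar) ** 2 for yi in y) / (n - 1)
    s_x2 = sum((xi - xbar) ** 2 for xi in x) / (n - 1)
    s_xy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / (n - 1)
    r = ybar / xbar
    rho_hat = s_xy / math.sqrt(s_x2 * s_y2)
    threshold = r * math.sqrt(s_x2) / (2 * math.sqrt(s_y2))
    # The finite population correction and 1/n cancel in the quotient.
    rel_var = (s_y2 + r ** 2 * s_x2 - 2 * r * s_xy) / s_y2
    return rho_hat, threshold, rel_var


# Hypothetical sample of three plots.
rho_hat, threshold, rel_var = efficiency_check(y=[2, 4, 9], x=[1, 2, 3])
use_ratio_estimator = rho_hat > threshold  # equivalent to rel_var < 1 when r > 0
```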

It may be interesting to note that, when an ancillary variable has been measured and is available, there is more than one option for the estimation design: the ratio estimator and the simple random sampling estimator. Both are unbiased (or, in the case of the ratio estimator, approximately unbiased). One will then, of course, choose the estimator with the smaller error variance, and a criterion for this is given above in terms of the correlation between target and ancillary variable.

Ratio estimator sampling examples: Examples for Ratio estimator

Characteristics of ratio estimator

When the ratio estimator is used, we implicitly assume a simple linear regression through the origin between target and ancillary variable, \(y=rx\), where the ratio \(r\) is the slope coefficient. The implicit assumption is that the relationship between \(Y\) and \(X\) really passes through the origin. In the above example with plot area as ancillary variable, this is certainly true: if plot area is zero, then the observed number of stems or basal area is also zero. However, if the relationship between \(Y\) and \(X\) does not pass through the origin, there will be an estimator bias.

When the intercept of the relationship between target and ancillary variable is 0, the estimators presented here are unbiased. In contrast, if the intercept is not equal to 0, e.g. when the ancillary variable is zero but the target variable is not, the estimators are biased for small sample sizes but approximately unbiased for large sample sizes.
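The effect of a non-zero intercept can be made visible with a small, fully enumerated example. The sketch below uses a hypothetical population of five elements and averages the ratio estimate of the mean, \(r\mu_x\), over all possible simple random samples of a given size; the function name and all values are illustrative assumptions.

```python
from itertools import combinations


def expected_ratio_mean(y, x, n):
    """Average of r * mu_x over all equally likely samples of size n."""
    N = len(y)
    mu_x = sum(x) / N
    estimates = [
        sum(y[i] for i in s) / sum(x[i] for i in s) * mu_x
        for s in combinations(range(N), n)
    ]
    return sum(estimates) / len(estimates)


x = [1, 2, 3, 4, 5]
y_origin = [3 * xi for xi in x]        # relationship through the origin
y_offset = [10 + 3 * xi for xi in x]   # relationship with intercept 10

mu_origin = sum(y_origin) / len(x)
mu_offset = sum(y_offset) / len(x)

bias_origin = expected_ratio_mean(y_origin, x, 2) - mu_origin
bias_offset_n2 = expected_ratio_mean(y_offset, x, 2) - mu_offset
bias_offset_n4 = expected_ratio_mean(y_offset, x, 4) - mu_offset
```

In this constructed population the bias vanishes for the relationship through the origin, while with the intercept it is positive and smaller for the larger sample size.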

Regression estimator

If, as addressed in the section above, the regression between \(Y\) and \(X\) does not go through the origin, one may use the so-called regression estimator, which explicitly establishes a simple linear regression between target and ancillary variable. As we assume, as usual in simple linear regression, that the joint mean value \((\bar{x},\bar{y})\) lies on the regression line, we may estimate the mean of the target variable from the sample as

\[\bar{y}_L=\bar{y}+b\left(\mu_x-\bar{x}\right)\]

where \(b\) is the estimated regression coefficient and \(\mu_x\) is the population mean of ancillary variable which must be known.

The estimated variance of the estimated mean is

\[\hat{var}(\bar{y}_L)=\frac{N-n}{N}\frac{1}{n}\frac{1}{n-2}\left\{\sum_{i=1}^n(y_i-\bar{y})^2-b^2\sum_{i=1}^n(x_i-\bar{x})^2\right\}\,\]

where the term in curly braces times the factor \(1/(n-2)\) is the mean square error of the regression.
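The regression estimator and its variance estimator can be sketched as follows. The sketch assumes that \(b\) is the ordinary least-squares slope, which the text refers to only as the estimated regression coefficient; the function name and the data are hypothetical.

```python
def regression_estimate(y, x, N, mu_x):
    """Return (b, mean_L, var_L) for a simple random sample.

    b is computed as the ordinary least-squares slope (assumption of this
    sketch); mu_x is the known population mean of the ancillary variable.
    """
    n = len(y)
    if n != len(x) or n < 3:
        raise ValueError("need at least three paired observations")
    ybar, xbar = sum(y) / n, sum(x) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    syy = sum((yi - ybar) ** 2 for yi in y)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b = sxy / sxx
    mean_L = ybar + b * (mu_x - xbar)
    # Residual sum of squares of the regression, divided by n - 2.
    mse = (syy - b ** 2 * sxx) / (n - 2)
    var_L = (N - n) / N * (1 / n) * mse
    return b, mean_L, var_L


# Hypothetical sample of n = 4 plots from a population of N = 20 plots.
b, mean_L, var_L = regression_estimate(
    y=[3, 5, 6, 10], x=[1, 2, 3, 4], N=20, mu_x=3.0
)
```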

As with the ratio estimator, we assume here a simple linear regression model between the two variables. If this model holds (that is, if the relationship can realistically be modeled in this way), then the regression estimator is unbiased. If it does not, we introduce an estimator bias of unknown order of magnitude. However, this bias is said to be particularly relevant for small sample sizes and less so for larger sample sizes.

References

- de Vries, P.G. 1986. Sampling Theory for Forest Inventory. A Teach-Yourself Course. Springer. 399 p.

- Thompson, S.K. 1992. Sampling. John Wiley & Sons. 343 p.